Beyond the Code: Why AI Agents Are BreakingYour Operations, Not Your Architecture

The AI agent revolution has arrived with a paradox: while these autonomous systems promise unprecedented automation, they’re exposing fundamental gaps in how we think about infrastructure, governance, and operations. The problem isn’t that your architecture can’t support AI agents; it’s that your platform wasn’t designed for the new operational realities they create.

The Silent Infrastructure Crisis

Traditional software architectures were built for predictability. A request comes in, code executes, and a response goes out. Clean, deterministic, and debuggable. AI agents have shattered this model, and most organizations are still trying to fit this new reality into old frameworks.

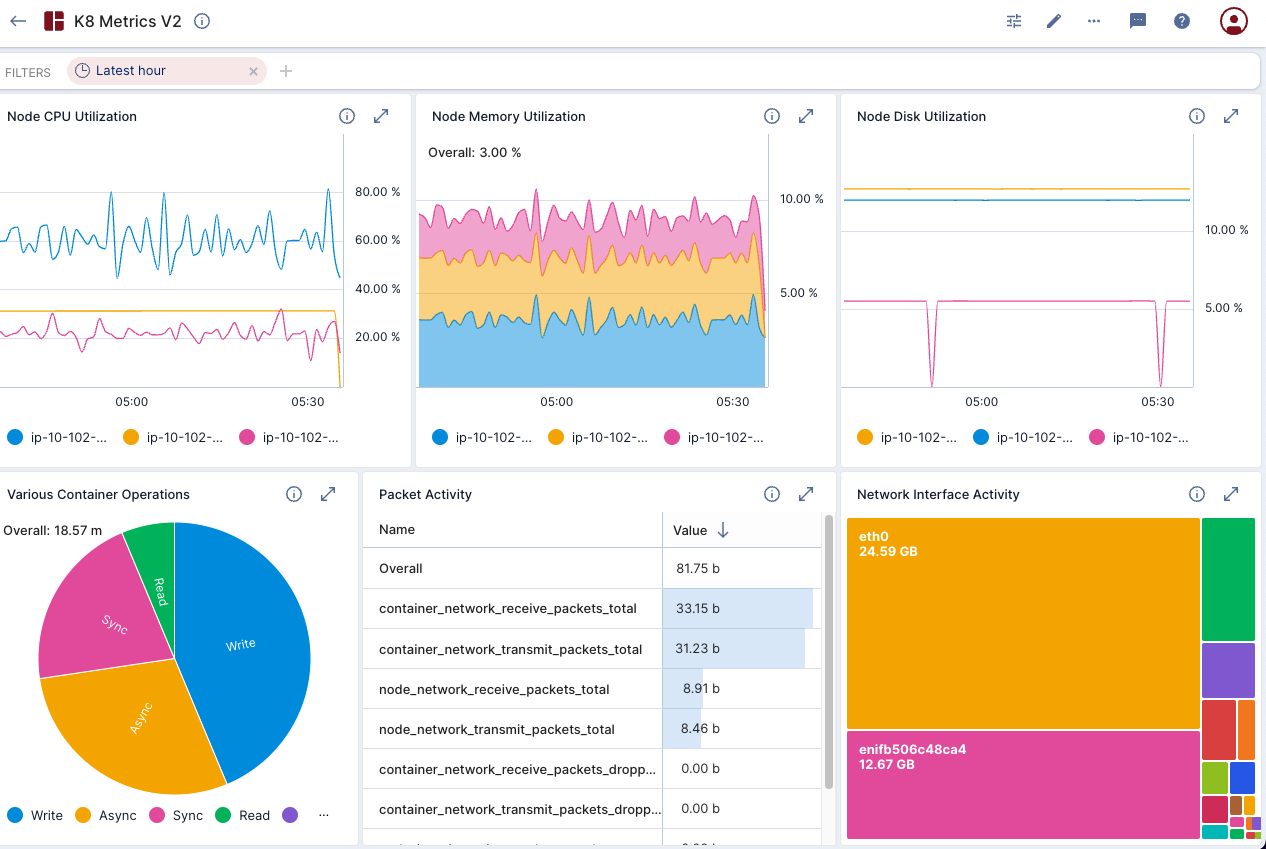

The core disconnect isn’t architectural… it’s operational. Your Kubernetes clusters can handle agent workloads. Your APIs can serve LLM requests. But when an agent burns through your monthly token budget in three hours, or when debugging why it made a specific decision requires reconstructing an interaction from three days ago, you realize the infrastructure was never the bottleneck.

Consider the economics: agents don’t just consume tokens, they multiply them. A single customer query that would take 500 tokens in a traditional chatbot can spiral into 15,000 tokens as an agent reasons through multiple steps, calls various tools, and iterates on solutions. Reasoning tokens and agentic tokens represent entirely new cost categories that existing infrastructure monitoring wasn’t designed to handle.

The Real Challenges: Five Breaking Points

When your AI agent fails, you’re often left with nothing but a generic error log and frustrated users. Unlike traditional applications where you can trace a request through your stack, agent failures aremaddeningly opaque.

The observability trilemma hits hard: You can have completeness (capturing all data), timeliness (seeing it when needed), or low overhead (not disrupting your system)… but rarely all three simultaneously. In distributed agent networks, this challenge intensifies as decisions cascadeacross multiple models, tool calls, and data sources.

Traditional debugging assumes determinism. The same input produces the same output. Agents obliterate this assumption. A prompt that worked yesterday might fail today because the agent’s memory state changed, because a tool returned different data, or because the LLM simply decided to reason differently.

Production debugging becomes archaeological work. Teams spend days trying to reproduce issues that happened once, in a specific context, with a particular memory state… conditions that may never align again. Without comprehensive tracing that captures the full execution history, unique request IDs, and context at every decision point, you’re debugging blind.

The hidden cost is context pollution. Agents don’t “think”… they function within a limited, fleeting token window where all elements converge: prompts, retrievals, tool outputs, and memory updates.When this context overflows or becomes polluted with irrelevant information, even the most sophisticated model produces suboptimal results.

Organizations are discovering that effective context engineering is the difference between a demo and production deployment. This requires treating context as a first-class architectural concern, not an afterthought. Schema-driven state isolation, context compression layers, and selective memory retrieval become essential patterns.

Token economics force hard choices. Every piece of contex t consumes tokens. Every token costs money. The pressure to minimize context while maintaining effectiveness creates a constant optimization tension. Companies that master context engineering can reduce token usage by 30-70%, fundamentally changing the economics of agent deployment.

Agents require both short-term episodic memory (current session context) and long-term semantic memory (knowledge that persists across sessions). Most implementations treat memory as an afterthought, leading to agents that either forget critical information or get overwhelmed by irrelevant historical data.

The experience-following problem is particularly insidious. When agents retrieve similar past experiences, they often repeat past mistakes… error propagation that compounds over time. Without sophisticated memory management that includes quality scoring, relevance filtering, and dynamic organization, memory systems become a liability rather than an asset.

Research shows that memory isn’t just about storage… it’s about intelligent curation. Agents need systems that can dynamically organize memories in an agentic way, creating interconnected knowledge networks through dynamic indexing and linking, allowing the memory network to continuously refine its understanding as new experiences are integrated.

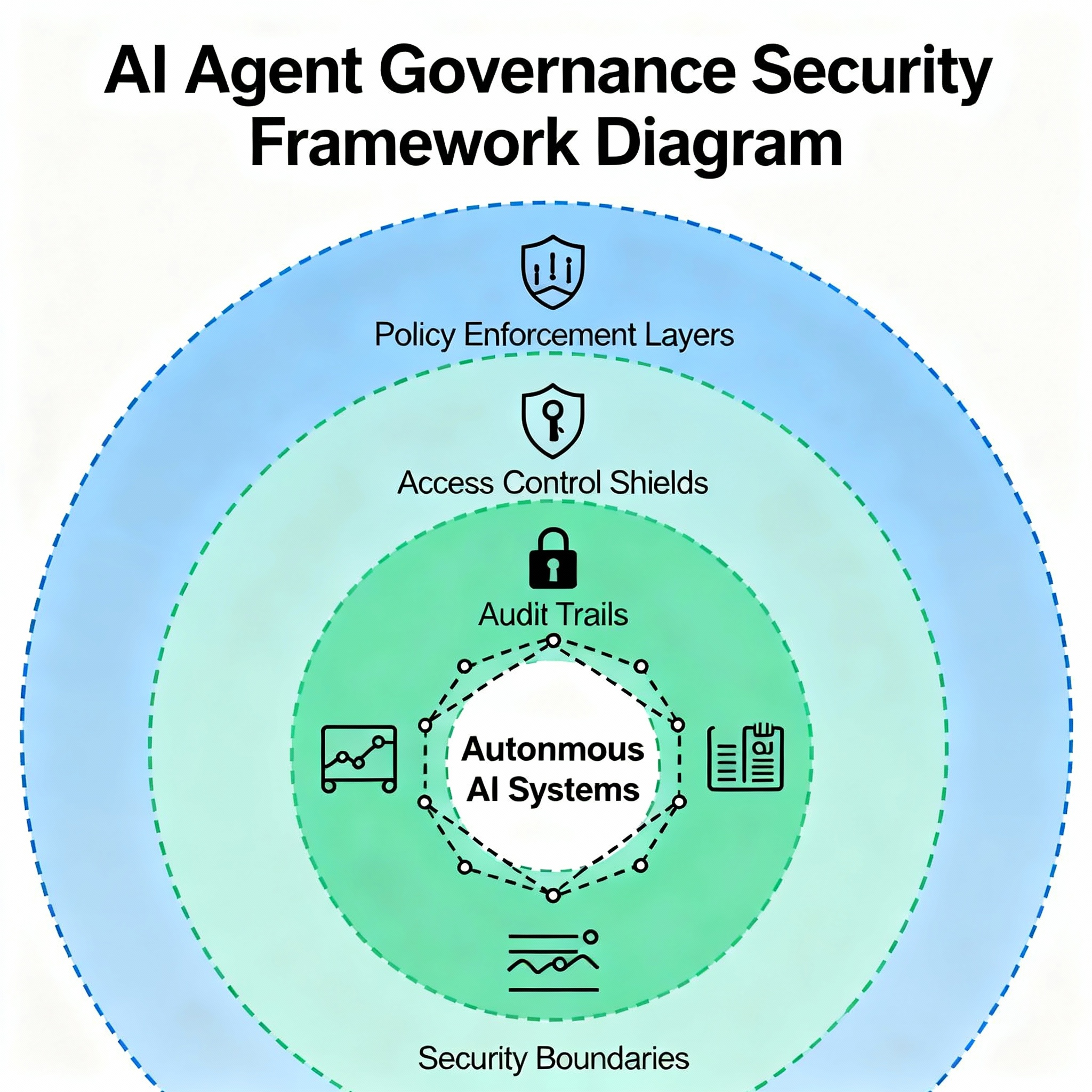

Security teams are discovering that AI agents don’t fit existing security models. Traditional security assumes humans make decisions and software executes them. Agents collapse this distinction, becoming autonomous actors that make decisions, access data, and take actions.

The prompt injection threat is particularly concerning. Agents can be manipulated through carefully crafted inputs; not just in user prompts, but through any data they retrieve from external sources. An agent that processes customer emails, searches internal databases, and takes actions based on what it finds creates an attack surface that’s orders of magnitude larger than traditional applications.

Organizations need governance frameworks that treat agents as first-class citizens in their security model. This means establishing clear permissions, implementing policy enforcement at the agent level, maintaining comprehensive audit trails, and designing systems that can detect and prevent malicious behavior in real-time.

Role-based access control isn’t enough. Agents operate across boundaries, making decisions that touch multiple systems and data sources. Traditional RBAC assumes static permissions for known workflows. Agents create dynamic workflows that don’t exist until runtime.

Perhaps the most underestimated challenge is what Gartner calls “agentic traffic”… the autonomous outbound API calls that agents make on their own.

This reverses the traditional API model. Instead of managing inbound traffic through API gateways, organizations must now govern outbound calls that agents autonomously generate. These calls happen in response to reasoning processes that humans didn’t explicitly trigger, consuming APIs and services in patterns that infrastructure wasn’t designed to handle.

Early adopters report three critical problems:

- Unpredictable costs:

Agents spiral into runaway loops, racking up LLM and API usage unnoticed. A single misbehaving agent can trigger budget blowouts by repeatedly calling external services. - Security risks:

Agents with broad credentials can be tricked via prompt injection into leaking private data or accessing unauthorized resources. - No observability or control:

Without proper telemetry, teams lack visibility into what agents are doing, why they’re doing it, or how to intervene when things go wrong.

The Missing Layer: AI Gateways and Governance Infrastructure

The emerging solution isn’t more sophisticated agents… it’s infrastructure that treats agent behavior as a first-class operational concern.

AI gateways are emerging as the critical missing layer. Like API gateways evolved to manage microservices traffic, AI gateways provide a control plane for agentic traffic. They sit between agents and the services they call, enforcing policies, providing observability, and optimizing usage.

Key capabilities include:

Organizations implementing AI gateways report moving from chaotic, ungoverned agent deployment to controlled, observable systems. The gateway becomes the enforcement point for organizational AI policies, the visibility layer for agent behavior, and the optimization engine for cost management.

Platform Engineering for the Agentic Era

Platform engineering teams are discovering they need to evolve from building tools for humans to building ecosystems for both humans and agents.

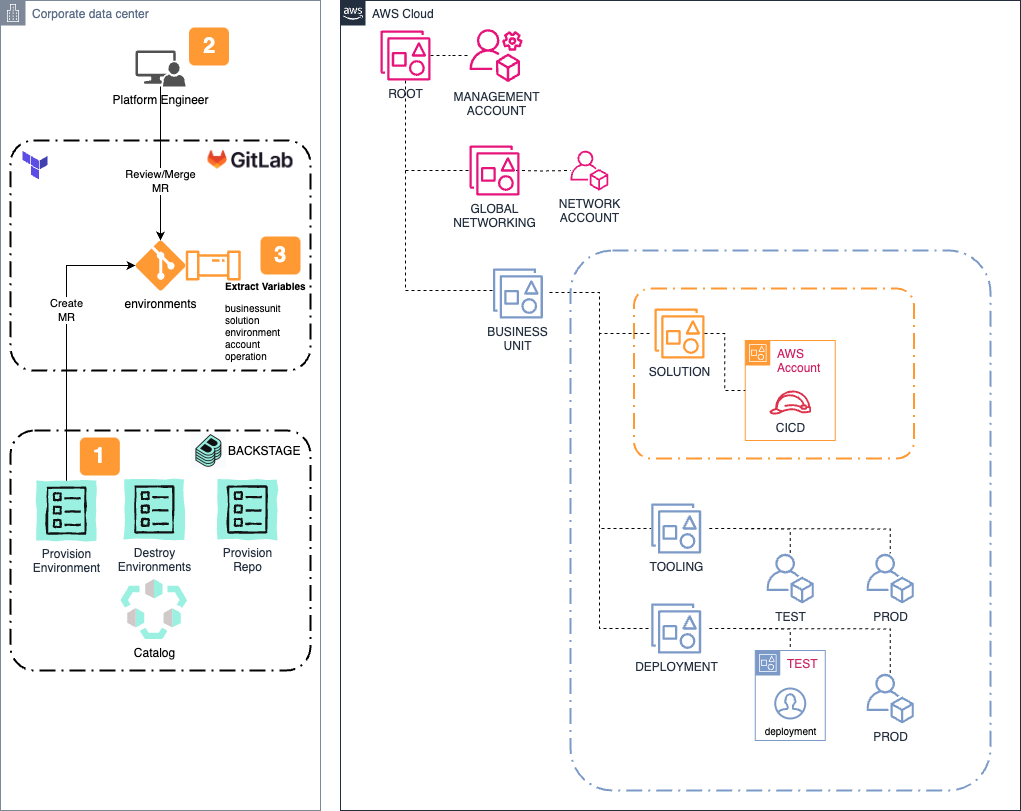

The shift is fundamental: Platforms must provide not just self-service capabilities but also the context, guardrails, and interfaces that allow agents to operate as trusted team members. This includes curating tools, defining agent roles, managing feedback loops, and governing access to environments and systems.

Key principles emerging from successful implementations:

The Architecture That Actually Matters

The successful agent deployments share common architectural patterns that differ significantly from traditional application architecture:

The Economics of Getting It Right

Organizations that address these operational challenges early are discovering dramatic advantages. The economics shift from “agents are too expensive” to “agents are our competitive advantage.”

Building the Foundation: Practical Steps

For organizations ready to move beyond proof-of-concept agent deployments, the path forward is clear:

The Agent-Native Future

The organizations winning with AI agents aren’t the ones with the best models or the most sophisticated prompts. They’re the ones that recognized early that agents require fundamentally different operational infrastructure.

The parallel to microservices is instructive. When microservices emerged, many organizations struggled until service meshes, API gateways, and observability platforms matured. The same evolution is happening with agents… but faster.

We’re moving from a world where AI is a feature to a world where AI agents are teammates. This requires infrastructure that can support, monitor, govern, and optimize agent behavior at scale. The technical challenges are significant, but they’re solvable with the right operational mindset.

The key insight: your architecture is probably fine. Your operations need transformation.

Start building the observability, governance, and platform capabilities that agents require. Treat agentic traffic as a first-class infrastructure concern. Invest in the tooling that makes agent behavior transparent, controllable, and optimizable.

The agent era demands operational excellence at a level most organizations haven’t yet achieved. But for those willing to build the right foundation, the competitive advantages are profound. Agents that can operate safely, efficiently, and transparently at scale will define the next generation of software systems.

The question isn’t whether your architecture can support agents. It’s whether your operations are ready for what they’ll demand.